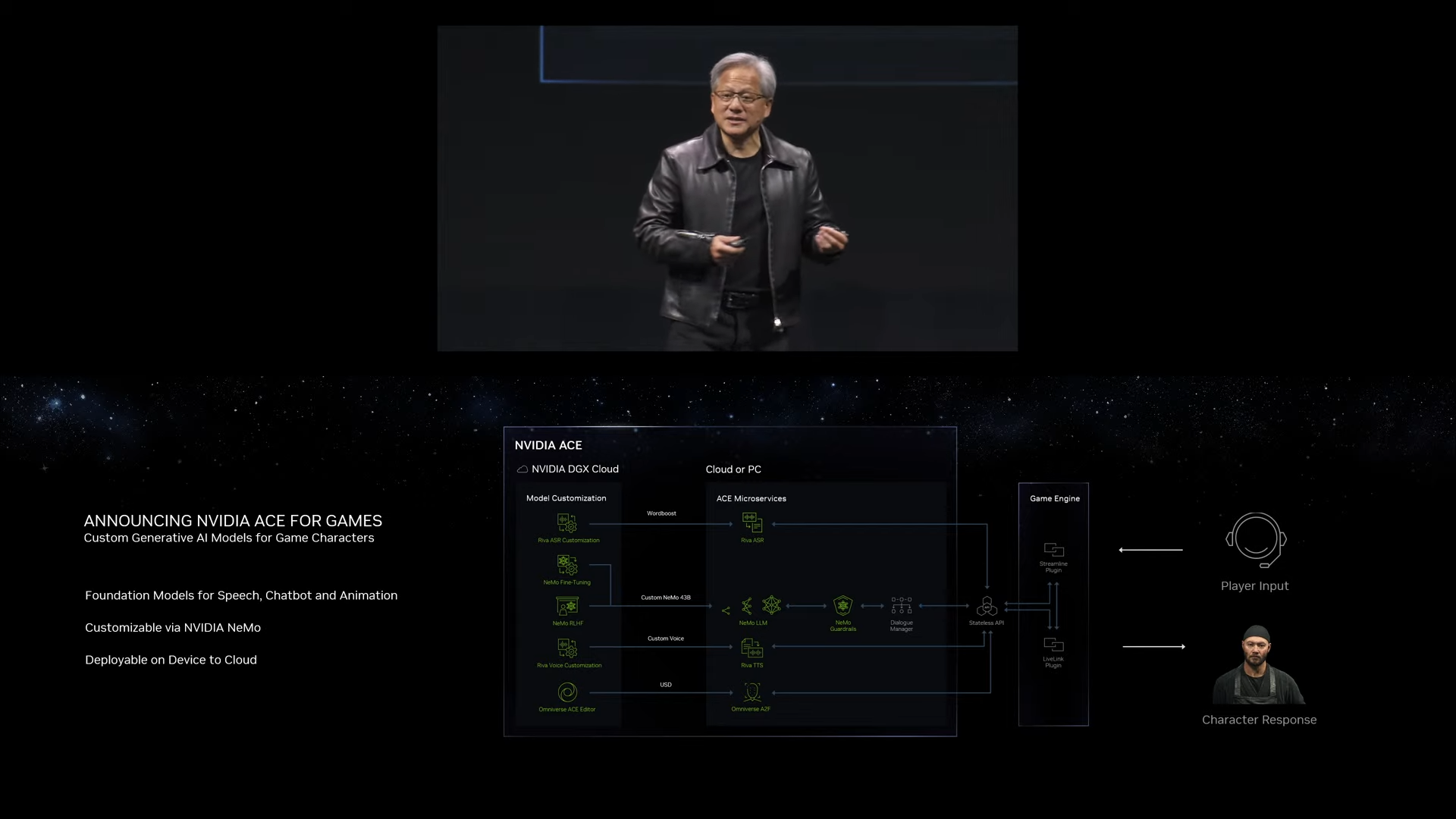

NVIDIA Announces ACE for Games with AI-Generated NPC Interactions

At Computex 2023, NVIDIA announced NVIDIA ACE for Games, a set of AI models for powering non-player characters (NPCs) in games.

NVIDIA ACE would let developers create characters that move and act like real people and aren’t limited by scripting. These characters would respond to players in real time, and the tool is aimed at both development efficiency and immersion.

Jensen Huang, CEO of NVIDIA, said that characters in games powered by NVIDIA ACE are no longer scripted, but instead converse in real time. The characters' dialogue may still need some work from developers, but the overall experience looks like a huge step forward for AI-generated content.

During the event, we got a glimpse of a demo, named Kairos, for a game using ACE. In it, an NPC named Jin responds accurately and consistently to natural language enquiries, thanks to generative AI.

ACE stands for Avatar Cloud Engine, and for this tool, NVIDIA has collaborated with Convai, a company focusing on conversational AI for games.

According to NVIDIA’s official press release:

Building on NVIDIA Omniverse™, ACE for Games delivers optimized AI foundation models for speech, conversation and character animation, including:

• NVIDIA NeMo™ — for building, customizing and deploying language models, using proprietary data. The large language models can be customized with lore and character backstories, and protected against counterproductive or unsafe conversations via NeMo Guardrails.

• NVIDIA Riva — for automatic speech recognition and text-to-speech to enable live speech conversation.

• NVIDIA Omniverse Audio2Face™ — for instantly creating expressive facial animation of a game character to match any speech track. Audio2Face features Omniverse connectors for Unreal Engine 5, so developers can add facial animation directly to MetaHuman characters.

NVIDIA says that its generative AI solutions are already being used by game developers and startups. As an example, GSC Game World has used Audio2Face to animate the facial expressions of its characters in the upcoming S.T.A.L.K.E.R. 2: Heart of Chernobyl.

Indie game developer Fallen Leaf is also using Audio2Face for character facial animation in Fort Solis, a game set on Mars.

While this is all we know for now, rest assured that we will keep you updated as new information becomes available.